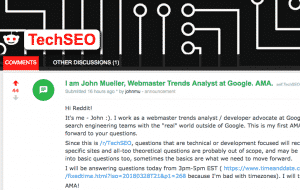

Google’s John Mueller, Webmaster Trends Analyst and Developer Advocate, is responsible for connecting Google’s internal search engineering teams with the world outside of Google. In practice, that means he answers questions from SEOs and others on Twitter. Today, though, he was kind enough to do an AMA (Ask Me Anything) on Reddit. Because the technical SEO subreddit is technical and development-focused, those were the kinds of questions he answered first. He spent an hour answering them.

Since there were a lot of questions, and many of them were very technical, I decided to narrow things down and put together a quick recap with takeaways from his AMA on Reddit. Below, I’ve reworded each question to make it simpler and easier to understand, followed by John’s answer.

Question: Paywalls and registration/login walls show certain content to Google and other content to humans. How should the paywall markup be applied if a site uses metering for flexible sampling?

Answer: By login-wall, I assume you have to log in/sign up to see the content? It’s my understanding that you can use the same markup there, to let us know what’s happening. You can serve the page like that specifically to Googlebot, provided you have the appropriate markup in place. Finding the right balance for metering is something every site has to figure out on its own: too few requests makes it hard for users to realize that you have great content worth signing up for.

I think our docs on this are pretty complete, let me know if there’s anything missing!

Question: Many responsive designs hide certain elements, like top level navigation, for usability purposes.

Answer: Just purely for usability reasons I’d always make important UI elements visible. Technically, we do understand that you sometimes have to make some compromises on mobile layouts (the important part is that it’s loaded on the page, and not loaded on interaction with the page), but practically speaking, what use is search ranking if users run away when they land on your pages and don’t convert? 🙂

Question: How do bots/spammers affect trends and search volume? What do you do to filter out those effects?

Answer: The internet is filled with bots, we have to deal with it, just like you have to deal with it (filter, block, etc.) – it can get pretty involved, complicated, expensive… Heck, you might even be pointing some of those at our services, for all I know :-/. For the most part, it seems like our teams are getting the balance right for filtering & blocking, though sometimes I see “captcha” reports from people doing fairly reasonable (though complicated) queries, which I think we should allow easier. ¯\_(ツ)_/¯

Question: How do you make a web page more search engine friendly if it’s heavy in SVG animation with no real textual content?

Answer: We’re not going to “interpret” the images there, so we’d have to make do with the visible text on the page, and further context (eg, in the form of links to that page) to understand how it fits in. With puns, especially without a lot of explanation, that’s going to make ranking really, really hard (how do you know to search for a specific pun without already knowing it?).

What I’d recommend there is to find a way to get more content onto the page. A simple way to do that could be to let people comment. UGC brings some overhead in terms of maintenance & abuse, but could also be a reasonable way to get more text for your pages. Oh, another thing, with inline SVGs, we probably also wouldn’t pick them up for image search. I don’t know if you’d get reasonable traffic from image search to pages like that, but if you think so, it might make more sense to have separate SVGs that you embed instead of inlining them.

Question: Crawl map visualizations. How close are they to what Google actually sees when doing crawling, parsing and indexing of the website?

Answer: FWIW I haven’t seen crawl map visualizations in a long time internally. It might be that some teams make them, but you might have heard that the web is pretty big, and mapping the whole thing would be pretty hard :-). I love the maps folks have put together, the recent one for paginated content was really neat. I wouldn’t see this as something that’s equivalent to what Google would do, but rather as a tool for you to better understand your site, and to figure out where you can improve crawling of it.

Getting crawling optimized is the foundation of technical SEO, and can be REALLY HARD for bigger sites, but it also makes everything much smoother in the end.

Question: Is it necessary to set an x-default for your alternate lang tags? Or is it enough to set an alternate lang for every language/site?

Answer: No, you don’t need x-default. You don’t need rel-alternate-hreflang. However, it can be really useful on international websites, especially where you have multiple countries with the same language. It doesn’t change rankings, but helps to get the “right” URL swapped in for the user.

If it’s just a matter of multiple languages, we can often guess the language of the query and the better-fitting pages within your site. Eg, if you search for “blue shoes” we’ll take the English page (it’s pretty obvious), and for “blaue schuhe” we can get the German one. However, if someone searches for your brand, then the language of the query isn’t quite clear. Similarly, if you have pages in the same language for different countries, then hreflang can help us there.
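
To make the hreflang discussion concrete, here is a minimal Python sketch that generates the `<link rel="alternate" hreflang="…">` tags for one set of equivalent pages. The URLs and the `hreflang_links` helper are hypothetical. The sketch assumes every page in the set carries the same full list (including a link to itself), since hreflang annotations are meant to be reciprocal, and it treats x-default as optional, in line with John’s answer above.

```python
from html import escape
from typing import Dict, List, Optional


def hreflang_links(alternates: Dict[str, str], x_default: Optional[str] = None) -> List[str]:
    """Return hreflang <link> tags for one set of equivalent pages.

    alternates maps an hreflang code ("en-gb", "de", ...) to an absolute URL.
    x_default, if given, is the URL for every language/country not listed.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}">'
        for code, url in sorted(alternates.items())
    ]
    if x_default is not None:
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}">')
    return tags


if __name__ == "__main__":
    # Hypothetical URLs: one English page per country, one German page for all countries.
    alternates = {
        "en-gb": "https://example.com/uk/",
        "en-us": "https://example.com/us/",
        "de": "https://example.com/de/",
    }
    # The same block goes into the <head> of every page in the set.
    for tag in hreflang_links(alternates, x_default="https://example.com/"):
        print(tag)
```

The two English entries are the case John highlights: same language, different countries, where the query alone can’t tell which URL to swap in.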

Question: We see a difference in indexed pages between the old and the new search console. ( https://ibb.co/kZShvS https://ibb.co/gZTY27 ). Is this a bug? Or does it have another reason?

Answer: The index stats in the old & new search console are compiled slightly differently. The new one focuses on patterns that we can tell you about, the old one is basically just a total list. I’d use the new one, it’s not only cooler, but also more useful 🙂

Question: Does Google search rely on data sources not produced by the crawl for organic ranking purposes? What might some of those be?

Answer: We use a lot of signals(tm). I’m not sure what you’re thinking of, but for example, we use the knowledge graph (which comes from various places, including Wikipedia) to try to understand entities on a page. This isn’t so much a matter of crawling, but rather trying to map what we’ve seen to other concepts we’ve seen, and to try to find out how they could be relevant to people’s queries.

Question: When might we expect the API to be updated for the new Search Console?

Answer: Oy, I hear you and ask regularly as well :-). We said it wouldn’t be far away, so I keep pushing 🙂

Question: When are you going to bring the extended data set to the existing API version? (as hinted in a Hangout in January;))

Answer: As I understand it, the first step is to expand the Search Analytics API to include the longer timeframe.

Past that I’d like to have everything in APIs, but unfortunately we have not managed to clone the team, so it might take a bit of time. Also, I’d like to have (as a proxy for all the requests we see) a TON of things, so we have to prioritize our time somehow :-).

At any rate, I really love what some products are doing with the existing API data, from the Google Sheets script to archive data to fancy tools like Botify & Screaming Frog. Seeing how others use the API data to help folks make better websites (not just to create more weekly reports that nobody reads) is extremely helpful in motivating the team to spend more time on the API.

Question: A/B Testing.

Answer: We need to write up some A/B testing guidelines, this came up a lot recently :). Ideally you would treat Googlebot the same as any other user-group that you deal with in your testing. You shouldn’t special-case Googlebot on its own, that would be considered cloaking. Practically speaking, sometimes it does fall into one bucket (eg, if you test by locale, or by user-agent hash, or whatnot), that’s fine too. For us, A/B testing should have a limited lifetime, and the pages should be equivalent (eg, you wouldn’t have “A” be an insurance affiliate, and the “B” a cartoon series). Depending on how you do A/B testing, Googlebot might see the other version, so if you have separate URLs, make sure to set the canonical to your primary URLs. Also, FWIW Googlebot doesn’t store & replay cookies, so make sure you have a fallback for users without cookies.
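
Here is a minimal Python sketch of the kind of bucketing John describes, under stated assumptions: visitors are split by a hash of a stable request attribute (the user-agent string, one of the options he mentions) rather than a cookie, Googlebot is never special-cased, the test has a hypothetical end date, and variant pages on separate URLs carry a canonical pointing at the primary URL. `assign_bucket`, `variant_for`, `canonical_tag`, and `EXPERIMENT_END` are illustrative names, not part of any Google API.

```python
import hashlib
from datetime import date

VARIANTS = ("A", "B")
EXPERIMENT_END = date(2018, 6, 30)  # hypothetical: A/B tests should have a limited lifetime


def assign_bucket(stable_key: str, experiment: str = "homepage-test") -> str:
    """Map a stable request attribute (e.g. user-agent or locale) to a variant.

    Every visitor, Googlebot included, goes through the same code path;
    no cookie is needed, so cookie-less clients still get a consistent page.
    """
    digest = hashlib.sha256(f"{experiment}:{stable_key}".encode("utf-8")).digest()
    return VARIANTS[digest[0] % len(VARIANTS)]


def variant_for(stable_key: str, today: date) -> str:
    if today > EXPERIMENT_END:
        return "A"  # test is over: everyone gets the primary version
    return assign_bucket(stable_key)


def canonical_tag(primary_url: str) -> str:
    """Variant pages on their own URLs point the canonical at the primary URL."""
    return f'<link rel="canonical" href="{primary_url}">'
```

Because a given user-agent string always hashes to the same bucket, Googlebot may end up consistently in one variant, which John says is fine; what matters is that the code path is identical for every visitor.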

Question: How does Google intend to encourage healthy content production on the web given the disruption to the attribution funding model which has powered content creation since, well, the beginning of the printed word?

Answer: I’ll let you in on a secret: the health of the web-ecosystem is something that pretty much everyone on the search side thinks about, and when we (those who talk more with publishers) have stories to tell about how the ecosystem is doing, they listen up. Google’s not in the web for the short-term, we want to make sure that the web can succeed together for the long-run, and that only works if various parties work together. Sure, things will evolve over time, the web is fast-moving, and if your website only makes sense if nothing elsewhere changes, then that will be rough. As I see the SEO/web-ecosystem, there are a lot of people who are willing to put in the elbow-grease to make a difference, to make changes, to bring their part of the world a step further, and not just cement existing systems more into place. (Personally, it’s pretty awesome to see :-))

Question: In the URL parameter tool in GSC, if you create a different property like domain.com and then domain.com/folder, it doesn’t seem to inherit the settings. If I set them differently, which will win: the more specific one (folder) or the one that’s likely to be controlled more centrally in an org (domain)?

Answer: Last I checked, the more specific one wins. However, if you don’t know now, chances are you (or whoever comes after you to work on the site) won’t know in the future, so personally I’d try to avoid using too complex settings there. It’s very easy to break crawling with those settings, and extremely hard to figure out if you don’t remember that you have something set up there.
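
As a rough mental model of “the more specific one wins,” the sketch below picks the longest matching property prefix for a URL. This is an assumption about the behavior John describes, not Search Console’s actual implementation; the property URLs and the parameter settings attached to them are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical Search Console properties and their URL-parameter settings.
PROPERTIES: Dict[str, Dict[str, str]] = {
    "https://example.com/": {"sessionid": "No URLs"},
    "https://example.com/folder/": {"sessionid": "Let Googlebot decide"},
}


def effective_property(url: str, properties: Dict[str, dict]) -> Optional[str]:
    """Return the most specific (longest) property prefix that covers url."""
    # Prefixes end in "/" so that /folder/ does not also match /folder-old/.
    matches = [prefix for prefix in properties if url.startswith(prefix)]
    return max(matches, key=len) if matches else None
```

The function is trivial on purpose: if you need code to work out which setting applies, the configuration is probably too complex to remember later, which is John’s point.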

Question: How is keyword cannibalization seen by Google?

Answer: We just rank the content as we get it. If you have a bunch of pages with roughly the same content, it’s going to compete with each other, kinda like a bunch of kids wanting to be first in line, and ultimately someone else slips in ahead of them :). Personally, I prefer fewer, stronger pages over lots of weaker ones – don’t water your site’s value down.

Question: Is it possible to track app indexing at all for iOS?

Answer: Unfortunately, I have no insight into App Indexing anymore since it moved to Firebase. However! Firebase has a way of getting direct support through their site, so I’d give that a shot!

Question: Bing offers a paid API, would Google be willing to open-the-doors for social research or return to offering an API as it had in the past?

Answer: Imma go out on a limb and assume you’re not looking to do social studies, but rather rank checking … 🙂 Lots of people want to scrape to do rank tracking, you wouldn’t be alone (Search Console does provide a much more interesting view IMO though – given that it shows impressions & clicks, rather than just ranking).

I’m not sure what the plans are there, but … we have a robots.txt (and ToS) that we’d prefer if bots & scripts honored, like we honor other sites’ robots.txt files (so we wouldn’t need as many anti-bot techniques). Past that, I don’t know if it’s really that useful in the bigger picture given how much personalization is happening, and how many different search features are shown nowadays. It’s not that array of 10 URLs that it used to be… I don’t make these calls, but I’d be surprised if we set up APIs (paid or not) to do that again.
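
For scripts that do crawl other sites, honoring robots.txt is straightforward with Python’s standard `urllib.robotparser`. The robots.txt content and user-agent below are hypothetical examples; a real crawler would call `set_url()` and `read()` to fetch the live file. This doesn’t make scraping Google’s results acceptable, since Google’s robots.txt and ToS still apply.

```python
from typing import Optional, Tuple
from urllib import robotparser

# Hypothetical robots.txt content; a real crawler would use set_url() + read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Crawl-delay: 5
"""


def polite_check(robots_txt: str, url: str,
                 user_agent: str = "ExampleResearchBot/1.0") -> Tuple[bool, Optional[int]]:
    """Return (allowed, crawl_delay_seconds) for url under the given robots.txt."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url), parser.crawl_delay(user_agent)
```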

Question: Is there any situation where Googlebot would ignore a nofollow tag and choose to trust the links?

Answer: I’m not aware of us doing that. You never know how things evolve over time, but my feeling is that this is a pretty straight-forward signal which is useful for a lot of things & rarely causes problems, so I’d be surprised if we suddenly ignored it.

Question: While not a direct ranking factor, would you expect that over a period of time if more people are clicking on a certain result more than others that it would rank higher over time?

Answer: We look at a lot of signals when we evaluate our algorithms, and as you know, we make a lot of updates to our algorithms (which we end up having to test). Personally, I don’t think people clicking on a site is always the best quality signal — stepping back from search, people do really weird things sometimes, and sometimes a lot of people do the same weird thing, but that doesn’t mean that it’s the best thing to do.

Question: If I check the cache of one domain, I see the cache from a different language version.

Answer: Usually this is due to duplication, we index just one version and fold the other into the same database record (hah, it’s a bit more complicated than that though). However, when we can tell that they belong together (you see that with looking up the other cache URL), then we can often still show it appropriately in normal searches.

In the end though, if you don’t like this, then follow the first rule of international business: localize, don’t copy 🙂

Question: I’ve been seeing a lot of inconsistencies between different markets of Google, i.e., Google.co.za operates differently than Google.co.uk, etc. Will all markets eventually be the same? Are the smaller markets like Google.co.za a bit delayed? Will they ever be on the same level as the larger ones? I’m asking in terms of the Google algorithm and ranking factors / penalties.

Answer: For the most part, we try not to have separate algorithms per country or language. It doesn’t scale if we have to do that. It makes much more sense to spend a bit more time on making something that works across the whole of the web. That doesn’t mean that you don’t see local differences, but often that’s just a reflection of the local content which we see.

The main exception here is fancy, newer search features that rely on some amount of local hands-on work. That can include things like rich results (aka rich snippets aka rich cards), which sometimes don’t get launched everywhere when we start, and sometimes certain kinds of one-boxes that rely on local content & local policies to be aligned before launch. I’d love for us to launch everything everywhere at the same time (living in Switzerland, which seems to be the last place anyone – not just Google – wants to launch stuff, grr), but I realize that it’s better to get early feedback and to iterate faster, than it would be to wait until everything’s worked out, and then launch something globally that doesn’t really work the way we wanted.

Question: Is there an exact date that Google will no longer support the ajax crawling scheme? We are trying to plan out our sprints for dev to come up with another solution.

Answer: I don’t have a date for the deprecation of AJAX crawling, but I’d expect it’s not far away — we’ve done 2 (3?) blog posts saying we’re turning it off already :-). If we can render your #! URLs, things should just work regardless, but having clean (non-#!) URLs is worthwhile not just for Google.
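
When moving off the AJAX crawling scheme, one practical task is mapping old `#!` URLs to clean paths. The sketch below assumes a hypothetical setup where the part after `#!` is a route path (e.g. `/#!/products/42`). Since fragments are never sent to the server, a mapping like this is most useful for rewriting internal links in bulk or generating a lookup table for client-side redirects.

```python
from urllib.parse import urlsplit, urlunsplit


def clean_url(url: str) -> str:
    """Map https://example.com/#!/products/42 to https://example.com/products/42.

    Assumes the hashbang fragment holds a path; other URLs are returned unchanged.
    """
    parts = urlsplit(url)
    if not parts.fragment.startswith("!"):
        return url  # not a hashbang URL
    route = parts.fragment[1:]
    if not route.startswith("/"):
        route = "/" + route
    base = parts.path.rstrip("/")  # keep any app prefix such as /app
    return urlunsplit((parts.scheme, parts.netloc, base + route, parts.query, ""))
```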

Question: One of my clients got a domain which had a bad history.

Answer: There’s no “reset button” for a domain, we don’t even have that internally, so a manual review wouldn’t change anything there. If there’s a lot of bad history associated with that, you either have to live with it, clean it up as much as possible, or move to a different domain. I realize that can be a hassle, but it’s the same with any kind of business, cleaning up a bad name/reputation can be a lot of work, and it’s hard to say ahead of time if it’ll be worth it in the end.

Question: Do 406 and 403 errors count as page errors for Google?

Answer: We tend to treat all of the 4xx errors pretty much in the same way, they cause the URLs to drop out of the index. I don’t think it would make sense to try to special-case all of these, a client-side error is a client-side error, the content isn’t there.

Question: Is the hreflang tag an SEO requirement for us to implement, or is it not necessary at the moment? Let’s say we are only targeting the US.

Answer: Hreflang is definitely not required. If you just have one version of your content, it doesn’t do anything anyway. Use it if you see the wrong country/language version ranking, otherwise you probably don’t need it.

Question: More and more websites are using cookies to deliver personalized content. I am wondering when does Google consider this to be cloaking …

Answer: Googlebot expects to see the same content as the average user from the same locale would see. Personalization is fine, sometimes it can make a lot of sense, but make sure there’s enough non-personalized content on the page so that Googlebot has something to use to understand the relevance of the page for queries that could be routed there.

Past that, Googlebot tends not to use cookies, and purely from a usability point of view, not providing deterministic content to users will probably turn them away more than anything else. If they don’t know what they’ll get when they visit, how can they recommend the site to anyone else, and why should they come back? Imagine you’re an ice-cream store that gives everyone a random flavor of ice-cream, some might like it today, but you only have to give them something they hate once for them to avoid coming back.
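
A minimal sketch of the approach John describes, assuming a hypothetical ice-cream-shop page: the generic block is always rendered, and cookie-based personalization only adds to it. A request without cookies (like Googlebot’s, or a first-time visitor’s) still gets complete, deterministic content. `render_page`, `GENERIC_BLOCK`, and the `fav_flavor` cookie are illustrative names.

```python
from html import escape
from http.cookies import SimpleCookie
from typing import Optional

# Hypothetical generic content: always rendered, so every visitor and Googlebot see it.
GENERIC_BLOCK = "<h1>Example Ice Cream</h1>\n<p>Fresh flavors, made every day.</p>"


def render_page(cookie_header: Optional[str]) -> str:
    parts = [GENERIC_BLOCK]
    if cookie_header:
        cookie = SimpleCookie()
        cookie.load(cookie_header)
        if "fav_flavor" in cookie:
            # Personalization only adds to the page; it never replaces the generic block.
            flavor = escape(cookie["fav_flavor"].value)
            parts.append(f"<p>Welcome back! Your usual: {flavor}</p>")
    return "\n".join(parts)
```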

Question: I’ve had an issue on a site where it seems they’re serving “spam” content to Google, but it’s not visible when analysing the site.

Answer: Most of the cases I’ve seen like this were hacked sites where a hacker was cloaking to Google. Getting hacked is no fun, to put it mildly :-/. Sometimes when you don’t see that spammy content anymore, they might just have fixed the hack, but Google still takes a bit of time to update all of the indexed pages. If you’re savvy and you see this happening to someone’s site, drop them a note so that you know they’re aware of the issue, and even if they already know, they’ll appreciate the heads-up.

(Sometimes that’s hard, of course, I’ve also seen lots of sites that claim they weren’t hacked when it’s pretty clear that they are… can’t help everyone, I guess.)

Question: Google operates Chrome. Why not use Chrome to highlight those nofollow, untrusted links?

Answer: When I browse the web with a plugin to highlight nofollow links, it’s pretty weird … I don’t know if that would really help users, or just confuse them more. If you have paid links, I’d just annotate them clearly on the page, so that users know too. Most of the time, I bet users don’t care if something’s an affiliate link that you get paid for, and your site doing a sensible disclosure of that probably gives you more of their trust in the end (at least that’s the case with me).

Question: Any timeline on when we’ll get the search console API with 15 months of backfill data?

Answer: We promised “soon” but I don’t know what the actual time will be. In the meantime, I’d just use one of the existing tools to archive the data on your end, that way you’ll have even more data once the API is around :).
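
Archiving on your end can be as simple as appending each day’s export to a CSV and skipping rows you already have. The sketch below assumes rows have already been fetched (e.g. from the Search Analytics API or a manual export) as dicts with hypothetical `date`, `query`, `page`, `clicks`, and `impressions` keys; the fetching itself isn’t shown.

```python
import csv
from pathlib import Path
from typing import Dict, Iterable, List

KEY_FIELDS = ("date", "query", "page")
FIELDS = KEY_FIELDS + ("clicks", "impressions")


def new_rows_only(archived: Iterable[Dict], fetched: Iterable[Dict]) -> List[Dict]:
    """Return fetched rows whose (date, query, page) key isn't archived yet."""
    seen = {tuple(str(row[k]) for k in KEY_FIELDS) for row in archived}
    fresh = []
    for row in fetched:
        key = tuple(str(row[k]) for k in KEY_FIELDS)
        if key not in seen:  # also drops duplicates within the fetched batch
            seen.add(key)
            fresh.append(row)
    return fresh


def append_to_archive(path: Path, fetched: Iterable[Dict]) -> int:
    """Append only new rows to a CSV archive; return how many were written."""
    archived: List[Dict] = []
    if path.exists():
        with path.open(newline="", encoding="utf-8") as f:
            archived = list(csv.DictReader(f))
    fresh = new_rows_only(archived, fetched)
    write_header = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS, extrasaction="ignore")
        if write_header:
            writer.writeheader()
        writer.writerows(fresh)
    return len(fresh)
```

Run daily (e.g. from cron), this keeps history beyond whatever window the API currently exposes.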

Question: In the new search console performance report it’d be great if there were some way to see a value akin to quality score in AdWords for pages

Answer: We’ve talked in the past about adding some kind of “quality score” there, but ultimately that would almost need to be an artificial score … the better measure is relevance (a site can be high quality but still not relevant to some queries), and that’s visible on a per-query basis in Search Analytics in the form of ranking position :).

Question: It was reported that having a noindex, follow for a page will eventually get treated as a noindex, nofollow.

Answer: I’m not aware of the noindex/follow vs noindex/nofollow causing problems for sites, so my take is that this is more of an academic difference rather than a practical one :). In the end, if you don’t want a page seen as a part of your website, well, we won’t see it as a part of your website :). I don’t think there’s anything the majority of sites need to do to work around that. If you care about this, then we can probably already crawl your site just fine through the other links across your site.

Question: Many SEOs recommend using a page-level noindex tag rather than a disallow in robots.txt, since supposedly robots.txt prevents the passing of link equity (Google cannot visit the page to assess link flow), whereas noindex still allows Google to crawl the page.

Answer: Regarding robots.txt vs noindex or canonical: the problem with robots.txt for that is that we don’t know what the page actually shows, so we wouldn’t know to either remove it from search (noindex), or to fold it together with another one (canonical). All links to that robotted page just get stuck. For canonicalization, I’d strongly recommend not using robots.txt. If crawling is causing real problems (server overloaded & very slow), then use the crawl-rate setting instead.
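
To illustrate why robotted pages “get stuck,” this sketch flags URLs whose on-page noindex or canonical can never be read because robots.txt blocks crawling them. It uses Python’s standard `urllib.robotparser`, whose matching is simpler than Googlebot’s (for example, it applies the first matching rule rather than the most specific one), so treat it as an approximation. The robots.txt rules and page inventory are hypothetical.

```python
from typing import Dict, List, Set
from urllib import robotparser


def hidden_directives(robots_txt: str, pages: Dict[str, Set[str]],
                      user_agent: str = "Googlebot") -> List[str]:
    """Return URLs whose noindex/canonical can't be seen because crawling is blocked.

    pages maps a URL to the directives found on it, e.g. {"noindex"} or {"canonical"}.
    """
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return sorted(
        url for url, directives in pages.items()
        if directives and not parser.can_fetch(user_agent, url)
    )
```

Any URL this returns is a candidate for removing the disallow so the directive on the page can actually be processed.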

Question: Google has said that only the slowest sites will be impacted by the coming Speed Update

Answer: I would not focus on a single score for determining the speed of your site, but rather try to take the different measurements into account and determine where you need to start working (we also don’t just blindly take one number). There’s no trivial, single number that covers all aspects of speed. I find these scores & numbers useful to figure out where the bottleneck is, but you need to interpret them yourself. There are lots of ways to make awesome & fast sites, being aware of an issue is the most important first step though.

Question: Regarding disavow files: Do you need to verify all versions of your site (http://, https://, http://www, https://www) in Google Search Console and upload the disavow file to all versions of the site?

Answer: No – a disavow is between two canonical URLs essentially. If the https://www version is the canonical for your site, that’s the one where the disavow is processed. (Caveat – sites with bad canonicalization can have pages on various versions, so if that’s the case for you, I’d just upload the same file on all of the indexed versions while you get those rel=canonicals fixed :-)).
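
For reference, a disavow file is plain text with one URL or `domain:` entry per line and `#` for comments. A small sketch that builds one follows; the domains and URLs are hypothetical. Per John’s caveat, the same output is what you would upload to each indexed version of the site while canonicalization is being fixed.

```python
from typing import Iterable, Optional


def build_disavow(domains: Iterable[str], urls: Iterable[str],
                  note: Optional[str] = None) -> str:
    """Return disavow-file text: '#' comment, 'domain:' lines, then single URLs."""
    lines = [f"# {note}"] if note else []
    lines += [f"domain:{d}" for d in sorted(set(domains))]
    lines += sorted(set(urls))
    return "\n".join(lines) + "\n"
```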

Question: If a page has review structured data (SD) on it with, say, a manually entered “reviewCount”: “6” and “ratingValue”: “4.8”, but that review information isn’t visible on the page, and the SD instead cites a link to Google Maps where the 6 reviews and the rating can be found, is this considered spammy or not…?

Answer: yeah, if people can’t add reviews, that’s not in line with our guidelines.
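
The safer pattern is for review markup to describe reviews that users can actually add and see on the page itself. This sketch builds schema.org `AggregateRating` JSON-LD from ratings assumed to be stored and displayed on the same page, and emits nothing when there are none. The `Product` type, the name, and the ratings are hypothetical examples.

```python
import json
from typing import List, Optional


def aggregate_rating_jsonld(name: str, ratings: List[int]) -> Optional[str]:
    """Build schema.org AggregateRating JSON-LD from reviews shown on this page."""
    if not ratings:
        return None  # no on-page reviews, so no review markup
    return json.dumps({
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": round(sum(ratings) / len(ratings), 1),
            "reviewCount": len(ratings),
        },
    }, indent=2)
```

Deriving the numbers from the same data that renders the visible reviews keeps markup and page content from drifting apart.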

Question: We use responsive design for the website in general, except that we hide the regular CTA buttons and instead serve buttons with tel:phonenumber links to enable click-to-call from mobile. How would this affect indexing when our site moves to mobile-first indexing?

Answer: should be a non-issue. We’ll still see everything (assuming responsive site), so that should just continue to work.

Question: With regards to local searches, how strict is duplicate content?

Answer: To me, this sounds like “how can I make doorway pages” (sorry :)) — well, you shouldn’t make doorway pages, they’re a bad user experience, and against our webmaster guidelines. If you don’t have unique information to add to a page other than a city-name, then I’d fold those pages together and instead make a single (or few) really strong pages instead.

Question: Why not offer API access to links data in Search Console?

Answer: In which ways would this make your site better?

Question: Is making AMP pages a solution if I have a slow RWD (responsive web design) version?

Answer: Sure, you could do that. However, you can also make really fast RWD pages, or you might be able to move some pages to pure-AMP. Sometimes, the right approach is to take the time to do things right, rather than to find yet another intermediate step that gives you more work in the long run (maintenance, doing it “right” later on anyway). AMP is certainly a great way to get fast pages up, but it makes sense to think about your longer-term goals too, which might be to move completely to a fast framework, which might be AMP, or something else.

It might also be that switching on AMP is very low-effort and gives you enough gains to make it worthwhile (eg, some CMSs make this really easy nowadays), so you could defer the longer-term plans to later on without investing too much into an intermediate step.

Question: Can SEO suffer if Google’s Fetch and Render tool doesn’t interpret my CSS properly?

Answer: I don’t think that would be the case. It makes monitoring a bit harder (you have to remember that it’ll look weird when you check again later on), but that’s more “your problem” than anything else. There’s also a chance that some browsers might run into similar issues, so I’d double-check that it’s really just Google-specific.

Question: We always try to mimic Googlebot when conducting crawls which means we use the Google smartphone & desktop user-agents when manually going through the site. We get blocked.

Answer: Yeah, lots of people run fake Googlebots, and hosters / CDNs sometimes end up blocking them. There’s not much we can do about that, it’s something you have to work out on your end.

Question: Let’s say that you have a website you would like to position both in terms of country targeting and language targeting, but you’re limited to one implementation from these two.

Answer: I wouldn’t look at this from an SEO POV, I’d look at it from a business / marketing point of view. Ranking is worthless if you’re not visible to users who are relevant to your business & who will convert when they visit. Find your audience, find out how you want to engage internationally, and that will determine your web strategy. Starting with SEO and hoping it results in a business strategy is not going to work.

Question: If a site gets a manual action for links and has hreflang attributes pointing to another site, will that affect the other site too?

Answer: You know, the better approach is just to fix the issue, rather than to try tricks to work around them (trust me, we’ve seen lots of tricks over the years).

Question: Sites are now using prerendering and precaching files to improve performance for users. Do you think that is a good or a bad move?

Answer: Proper prerendering isn’t trivial, but I’ve heard of cases where it does significantly speed up the first load of a site, so I wouldn’t exclude it as an option off-hand. It’s not easy, but it can be done.

Question: How should the x-default hreflang be used? I’ve seen sites use it to link to the site’s preferred language (usually English), but from what I understand, the intended use is to link to a language selector/picker page.

Answer: It’s basically for all country/language versions that you didn’t explicitly mention. It could be a language/country-picker, it could be an automatically-redirecting homepage, it could be your preferred “international” version, whatever you want :). If you don’t specify one, we’ll guess based on the other items specified, which can be fine too.
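
To illustrate the role of x-default, here is a simplified, hypothetical selection function: exact language-region match first, then a language-only match, and x-default for everything not explicitly listed. This is not how Google chooses URLs (John notes Google will guess if you don’t specify one); it only shows what “all versions you didn’t explicitly mention” means in practice.

```python
from typing import Dict, Optional


def pick_alternate(user_locale: str, alternates: Dict[str, str]) -> Optional[str]:
    """Simplified illustration: exact match, then language-only, then x-default.

    alternates keys are lowercase hreflang codes such as "en-us", "de", "x-default".
    """
    locale = user_locale.lower().replace("_", "-")
    language = locale.split("-")[0]
    for candidate in (locale, language, "x-default"):
        if candidate in alternates:
            return alternates[candidate]
    return None  # nothing matches and no x-default was given
```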

Question: Let’s say we have a hiking website with lots of tour landing pages. Essentially, all the pages describe tours to the same point of interest.

Answer: That sounds a lot like doorway pages … I’m a big fan of having fewer stronger pages, rather than many similar pages.

Question: It’s said that Googlebot will follow up to 5 redirects at once before giving up and coming back later. When Googlebot comes back, does it continue at that 5th redirect or does it start over? What’s the most hops it would eventually follow and count?

Answer: Yeah, we’d start at the last stop. I don’t know where we’d ultimately stop, I think someone once set up test pages for that (it’s hard to really measure with artificial setups like that though). Practically speaking, if you see a bunch of chained redirects from links on your site to pages within your site, and you care about those pages, I’d just clean that up.
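
Cleaning up chained redirects mostly means pointing every old URL straight at its final destination. The sketch below follows a hypothetical map of internal redirects, counts the hops, refuses loops and overly long chains, and produces a flattened one-hop map. The five-hop figure from the question isn’t encoded anywhere; the sketch simply aims for one hop per source.

```python
from typing import Dict, Tuple


def resolve(url: str, redirects: Dict[str, str], max_hops: int = 20) -> Tuple[str, int]:
    """Follow a redirect map from url; return (final_url, hops). Raises on loops."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain at {url}")
        seen.add(url)
    return url, hops


def flatten(redirects: Dict[str, str]) -> Dict[str, str]:
    """Point every source directly at its final destination (one hop each)."""
    return {src: resolve(src, redirects)[0] for src in redirects}
```

The flattened map can drive both the server’s redirect rules and a pass that updates internal links to the final URLs.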

Question: Are noindex directives in the X-Robots-Tag HTTP header acted upon quicker than noindex directives in a robots meta tag?

Answer: Once we see a noindex, we’ll take that into account regardless of where we spot it. The ones that take a bit longer are when we have to process JavaScript before it’s visible, but if it’s in the head from the start or in the http response, that’s all the same to us. Sometimes one just works better for your processes than the other (eg, if you have non-HTML content like PDFs).
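
A minimal audit helper that checks both places John mentions, the `X-Robots-Tag` response header and a robots meta tag in the HTML, using only Python’s standard library. It assumes you already have the header value and the raw (unrendered) HTML. Like a non-rendering crawler, it won’t see a noindex inserted by JavaScript, which is the slower case John describes.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        if (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())


def has_noindex(x_robots_tag: str, html: str) -> bool:
    # Header values may carry a user-agent prefix, e.g. "googlebot: noindex".
    tokens = {t.split(":")[-1].strip().lower() for t in x_robots_tag.split(",") if t.strip()}
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    found = tokens | parser.directives
    return "noindex" in found or "none" in found  # "none" implies noindex, nofollow
```

The header path is the only option for non-HTML files such as PDFs, which matches John’s remark about choosing whichever fits your processes.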

Additional Questions Answered by John Mueller

After posting the answers to the first 47 questions, John went ahead and answered more questions. So, here are the additional questions and answers. The questions are somewhat edited, as some of them were really long. But I didn’t edit any of the answers.

Question: If I have a site that displays specific products or events based on the browser’s physical location, how can I be sure that Googlebot is able to discover that content for areas outside of Mountain View, CA?

Answer: Googlebot primarily crawls from Mountain View, CA (or at least, that’s where the IP lookup services tend to point), so if there’s something unique to other locations that you provide on your website, it might not see that content if you never show it to other users. The recommendation here is to make sure that your pages have significant generic content that’s accessible from everywhere, so that we can rank your pages appropriately regardless of the location. Usually this just means you have to have a mix of personalized & generic content on your homepage / category pages. The detail pages are often accessible from anywhere anyway, so that’s less of a problem.

Question: A person’s client, for legal reasons, needs to keep their website out of the SERPs in a specific country. How should they go about it?

Answer: You can’t keep it out of websearch in individual countries. At most, you can deliver generic content for search and put a noarchive on the page so that people can’t look at the indexed version, but you can’t prevent it from showing up at all.

With regards to countries, we expect Googlebot to see the same content as other users in that region would see, and usually we crawl from the US, so if you’re blocking users in other countries, that’s not really nice, but still OK with our guidelines. However, if you need to block users in the US and still want to allow Googlebot’s crawling from the US, that would be considered cloaking and against our guidelines.

I realize this can make things harder, but so far most of the use-cases haven’t been extremely compelling (happy to bring up anything awesome & critical that breaks with this setup, if you want to send it my way), and being a US-based company, there are also sometimes things like legal issues/policies involved …

Question: Most of the tools Google provides around load time are mobile-focused. Do you have any insight or recommendations about desktop load times, and whether the effort to go from 3 seconds to 1 second on desktop gives a significant boost, particularly outside of the ecommerce realm?

Answer: For speed, the focus on mobile is two-fold: on the one hand, that’s where the people are (and will be even more), on the other hand, mobile devices are often more limited and see effects of speed much stronger (slower networks, slower CPUs, less RAM). Improving desktop speed is certainly also a thing to look at, but if you can make a big dent into mobile speed, the desktop side often just follows on its own.

Question: What would happen if Wikipedia removed the nofollow attribute from their external links?

Answer: I imagine the main effect would be that Wikipedia gets overrun with people dropping links, and they end up implementing much stricter guidelines & checks. I’m sure there would be a period of flux in search from that, but the web is dynamic, things change all the time, and we have to deal with that anyway, so I doubt things would collapse 🙂

Question: I own 100 domain names other than my main domain name where my main website is. Is it OK to 301 redirect them to my main website’s home page? Or will Google see this as spam?

Answer: Redirect away. I doubt you’d get SEO value from that, but that’s kinda up to you. For example, you might use a domain name in an ad campaign to have a memorable URL to show, even if it ends up redirecting to your main site in the end.
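If you do point spare domains at the main site, the redirect itself is simple. Below is a minimal sketch as a tiny WSGI app. It answers every request on a parked domain with a 301 to the main home page. It also supports an optional per-domain override, such as the memorable ad-campaign domain mentioned in the answer. All domain names are hypothetical placeholders. In practice this is usually a few lines of web-server or registrar configuration rather than application code.

```python
MAIN_HOME = "https://www.example.com/"  # hypothetical main site

# Hypothetical override: a campaign domain that should land on a specific page.
OVERRIDES = {
    "summer-sale.example": "https://www.example.com/sale/",
}


def parked_domain_app(environ, start_response):
    """301-redirect any request on a parked domain to the main site."""
    host = environ.get("HTTP_HOST", "").split(":")[0].lower()  # drop any port
    target = OVERRIDES.get(host, MAIN_HOME)
    start_response("301 Moved Permanently", [
        ("Location", target),
        ("Content-Length", "0"),
    ])
    return [b""]


# To try it locally (standard library only):
#   from wsgiref.simple_server import make_server
#   make_server("", 8000, parked_domain_app).serve_forever()
```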

Question: Does Domain Authority exist?

Answer: Of course it exists, it’s a tool by Moz. https://moz.com/learn/seo/domain-authority

Question: Will Google rate page quality lower when there’s a large quantity of links that serve non-200 status codes (noted in this example as redirects). If yes, what if those links are set to nofollow?

Answer: Personalization & redirects are fine, but you need to make sure that Googlebot has enough generic content to rank the pages regardless.

Question: You’ve mentioned the Search Console API will be updated soon with data past 90 days… Will the API stay the same, or will you change it, meaning many of us will need to rewrite our apps and scripts?

Answer: AFAIK it’ll be the same API, just with data for the older datapoints available.
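If your scripts already call the Search Analytics `query` method, the answer suggests that reaching further back should mostly be a matter of sending older dates. The sketch below assumes the request-body field names `startDate`, `endDate`, `dimensions`, and `rowLimit`. It splits a long date range into calendar-month windows so that each request stays small. The chosen dimensions and row limit are illustrative values only.

```python
from datetime import date, timedelta


def monthly_query_bodies(start, end, dimensions=("query", "page"), row_limit=1000):
    """Build one Search Analytics request body per calendar month in [start, end]."""
    bodies = []
    current = start
    while current <= end:
        # First day of the following month.
        if current.month == 12:
            next_month = date(current.year + 1, 1, 1)
        else:
            next_month = date(current.year, current.month + 1, 1)
        window_end = min(next_month - timedelta(days=1), end)
        bodies.append({
            "startDate": current.isoformat(),
            "endDate": window_end.isoformat(),
            "dimensions": list(dimensions),
            "rowLimit": row_limit,
        })
        current = window_end + timedelta(days=1)
    return bodies


# Assumed usage with google-api-python-client and an already authorized
# `service` object; SITE_URL is a hypothetical placeholder:
#   for body in monthly_query_bodies(date(2017, 1, 1), date(2018, 3, 31)):
#       rows = service.searchanalytics().query(siteUrl=SITE_URL, body=body).execute()
```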

Question: Sites seem to be re-created every thirty days or so in my client’s industry. Is it worth it to submit them as spam?

Answer: My guess is these don’t get a lot of visibility, but you might find them if you explicitly search for them. If that’s the case, I’d just ignore them. If you’re seeing them being more of an issue in normal search results, I’m happy to pass them on to the team too, if you want to send a bunch my way.

Question: In the past, when we made changes to content or created new pages on our sites, we could use Fetch and Render to get those pages indexed or recrawled. Those changes typically showed up within 24 hours; now it’s taking closer to a week, and sometimes up to two weeks, for pages to get indexed. What is going on with data centers that’s causing that?

Answer: I’d generally focus on the more scalable & normal ways of getting content indexed & updated, rather than submitting them manually. TBH I’m kinda surprised so many people do that manually when the normal processes should work just as well – save your time, just make sure your CMS uses sitemaps & pings them properly.
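Here is a rough illustration of what "make sure your CMS uses sitemaps" involves, as a minimal sitemap generator that uses only the standard library. The page list and URLs are placeholders. A CMS would emit one `<url>` entry per indexable page and update `<lastmod>` when the content actually changes. Google has since deprecated its sitemap ping endpoint. Listing the sitemap in robots.txt with a `Sitemap:` line, or submitting it in Search Console, is the safer way to tell crawlers where it lives.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (absolute_url, last_modified_date)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = loc  # ElementTree escapes & and <
        ET.SubElement(entry, "lastmod").text = lastmod.isoformat()
    # Write the XML declaration by hand so it always states UTF-8.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


# Placeholder pages; a CMS would generate this list from its database.
example = build_sitemap([
    ("https://www.example.com/", date(2018, 4, 10)),
    ("https://www.example.com/products?id=1&color=red", date(2018, 4, 9)),
])
print(example)
```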

Fetch and Render then submit to index is just a smart process to go through to make sure the site is behaving as expected for the bot, to not do so seems ‘abnormal’ to me.

Question: We are seeing evidence of data centers not consistently reporting sites in the index. While this is not an issue for a local site, a national site could be negatively impacted by it. As an example, a page gets indexed in the East Coast data center while the West Coast data center shows it as not indexed. We have seen examples of this consistently. Again, what is happening with indexing and crawling the internet that is causing this new behavior on Google?

Answer: Regarding datacenters: yes, we have a bunch of them, and sometimes you can see differences across them. That’s kinda normal and has been like that since pretty much the beginning. Usually that settles down on its own though.

Question: Do you think pages with 1-year-old content are losing rankings because Google wants to show fresh content every time?

Answer: No. Fresher doesn’t mean better. Don’t fall into the trap of tweaking things constantly for the sake of having tweaked things, when you could be moving the whole thing to a much higher level instead.

Question: SEOs tend to believe that, on JS websites, anything not loaded within 5 seconds will not be seen.

Answer: We don’t have a fixed timeout. It’s also pretty much impossible to know how long Google will take to render something, since we cache so aggressively. It might take 10 seconds to load in a browser, but if we can do that all from a cache, it might be significantly faster.

Question: What is your stand on “white hat cloaking”?

Answer: My hunch is that they’re adding significant complexity that will bite them in the end. As soon as you start doing things like that, you have a lot of conditional code running on your server, you can’t easily move to a CDN, and if the next team does a redesign of your site, chances are they’ll have no idea what that code was meant to do and either break it, or just remove it. Also, any time you cloak subtly to Googlebot like this you run the risk of something breaking on your end, and you not noticing it at all (eg, maybe the code starts serving 500 errors to Googlebot, but all users see the site normally). There are better ways to improve crawlability than to add so much complexity.
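The failure mode described here, where code quietly serves errors only to Googlebot, can at least be monitored. The minimal sketch below requests the same URL with a browser-like User-Agent and a Googlebot-like one. It flags a difference in status code, or an empty response for only one of them. The User-Agent strings and the checks are illustrative assumptions. It only catches branches keyed on the User-Agent, not logic keyed on Googlebot's IP addresses, which a request from your own machine cannot reproduce.

```python
import urllib.error
import urllib.request

# Illustrative User-Agent strings; adjust to whatever your server branches on.
USER_AGENTS = {
    "browser": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
               "(KHTML, like Gecko) Chrome/99.0 Safari/537.36",
    "googlebot": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
}


def fetch(url, user_agent):
    """Return (status_code, body_bytes) for a GET with the given User-Agent."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status, response.read()
    except urllib.error.HTTPError as err:  # 4xx/5xx still carry a status and body
        return err.code, err.read()


def compare_user_agents(url, fetcher=fetch):
    """Return a list of human-readable problems; empty means no divergence found."""
    results = {name: fetcher(url, ua) for name, ua in USER_AGENTS.items()}
    statuses = {name: status for name, (status, _body) in results.items()}
    problems = []
    if len(set(statuses.values())) > 1:
        problems.append(f"{url}: status codes differ {statuses}")
    empty = [name for name, (_status, body) in results.items() if not body.strip()]
    if empty and len(empty) < len(results):
        problems.append(f"{url}: empty body only for {empty}")
    return problems
```

Running `compare_user_agents` over a handful of key URLs on a schedule would surface the "500s only for Googlebot" case before it shows up in rankings.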

Question: At what point does the use of machine learning make it impossible/difficult to isolate complex issues, provide concrete feedback, know what the individual factors are in the algorithm, etc. (outside of the obvious build for the user)?

Answer: That is a big problem, for sure. I think it helps to have discrete systems that you can debug individually, at least then you can work back towards which model was confused, and find ways to improve that.

Question: Does Google care if we use 307 vs. 301 redirects for HTTP > HTTPS?

Answer: The 307 redirect is probably a 301 anyway :-). Browsers show an HSTS move from HTTP to HTTPS as a 307, so that’s what you might be seeing.
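The setup the answer implies can be sketched as a small WSGI app with assumed defaults. The server answers plain-HTTP requests with a 301 to the HTTPS URL, and HTTPS responses carry a `Strict-Transport-Security` header. Once a browser has seen that header, it rewrites later `http://` requests to HTTPS on its own. Chrome's DevTools lists that step as a "307 Internal Redirect", which is likely the 307 being observed. The one-year `max-age` is an illustrative value. Behind a TLS-terminating proxy, the scheme may need to come from a forwarded header rather than `wsgi.url_scheme`.

```python
from urllib.parse import quote

HSTS_VALUE = "max-age=31536000"  # one year; illustrative, pick your own policy


def https_redirect_app(environ, start_response):
    scheme = environ.get("wsgi.url_scheme", "http")
    host = environ.get("HTTP_HOST", "www.example.com")  # placeholder fallback host
    # PEP 3333 passes PATH_INFO as latin-1-decoded bytes; re-encode before quoting.
    path = quote(environ.get("PATH_INFO", "/").encode("latin-1"))
    query = environ.get("QUERY_STRING", "")

    if scheme != "https":
        # Server-side permanent redirect: what a client without a cached
        # HSTS policy receives.
        location = f"https://{host}{path}" + (f"?{query}" if query else "")
        start_response("301 Moved Permanently", [("Location", location), ("Content-Length", "0")])
        return [b""]

    body = b"<!doctype html><title>Secure page</title>"
    start_response("200 OK", [
        ("Content-Type", "text/html; charset=utf-8"),
        # Browsers only honor HSTS when the header arrives over HTTPS.
        ("Strict-Transport-Security", HSTS_VALUE),
        ("Content-Length", str(len(body))),
    ])
    return [body]
```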

Question: Do you have any idea about when the new GSC will have all the new features?

Answer: We’re taking it step by step, and trying to rethink the functionality rather than just blindly swapping in a new UI. You’re going to see the old GSC for a while, don’t worry 🙂

Question: For hreflang, I’ve never seen an error for en-uk in GSC. I know it should be en-gb, but since this country code isn’t used (or rather, is reserved) in ISO 3166-1 alpha-2, is Google correcting this on its end, and is that why we don’t see errors?

Answer: Sometimes we can figure that out :). This doesn’t work if the wrong code is a legitimate country though, eg if you use “es-la” for Spanish/Latin America, we’re going to see that as being for Spanish/Laos instead.
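A simple pre-publication check can catch both cases mentioned here. The sketch below assumes an hreflang value is a two-letter language code, optionally followed by a two-letter region code. `RESERVED_FIXES` and `MISLEADING` are small, hand-picked illustrative tables, not an ISO registry. A real check would validate against the full ISO 639-1 and ISO 3166-1 lists.

```python
# "UK" is exceptionally reserved in ISO 3166-1 alpha-2; the assigned code is "GB".
RESERVED_FIXES = {"UK": "GB"}

# Valid region codes that are easy to misread (illustrative, not exhaustive).
MISLEADING = {"LA": "Laos, not Latin America"}


def check_hreflang(code):
    """Return warnings for a single hreflang value such as 'en-gb'."""
    if code.lower() == "x-default":
        return []
    parts = code.split("-")
    language = parts[0].lower()
    warnings = []
    if len(language) != 2 or not language.isalpha():
        warnings.append(f"{code}: language should be a two-letter ISO 639-1 code")
    # Treat the first two-letter subtag after the language as the region.
    region = next((p.upper() for p in parts[1:] if len(p) == 2 and p.isalpha()), None)
    if region in RESERVED_FIXES:
        warnings.append(f"{code}: '{region}' is not an assigned region; use '{language}-{RESERVED_FIXES[region]}'")
    elif region in MISLEADING:
        warnings.append(f"{code}: region '{region}' means {MISLEADING[region]}")
    return warnings


for value in ["en-gb", "en-uk", "es-la", "x-default"]:
    print(value, check_hreflang(value))
```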

Question: If a large website offers products under several white-label agreements and spins off unbranded “clones” on custom domain names, with all the appropriate canonical tags on all product pages pointing to the original source, is there a point where Google would ignore the canonical tags and/or consider these part of a spam network?

Answer: Canonicals are fine, but what would generally happen is that we just index the canonical version. IMO that’s the right thing to happen anyway. If you want a site to be seen as a unique site, it should be, well, a unique site. Also, often having a single really strong site can be more worthwhile than having many “watered down” sites that show the same / similar content.

Question: I was wondering how you define the difference between a mobile first index and a mobile only index?

Answer: Probably just semantics :). We index desktop-only content anyway, I wouldn’t want it to come across as us not indexing that anymore.

Question: At one point in the past, it was indicated the HTTPS “boost” only checked the protocol not the certificate. Has that changed?

Answer: That’s correct, we don’t differentiate between valid certificates for websearch.

Question: I wonder whether the URL structure of the canonical tag and the hreflang tag should be the same.

Answer: The cleaner you can make your signals, the more likely we’ll use them. This is critical for hreflang, where we need to have the exact URL indexed like that in order to use it as a part of the hreflang set.
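One way to act on "the cleaner you can make your signals" is to check two things. First, every URL referenced in an hreflang set should be exactly the URL that page declares as canonical. Second, the alternates should link back to each other, since Google's hreflang documentation also asks for return links. The sketch below works on crawl data you already have. The `pages` structure is a hypothetical format, not the output of any particular tool.

```python
def check_hreflang_targets(pages):
    """pages: {crawled_url: {"canonical": url, "hreflang": {code: url}}}.

    Returns a list of problems; an empty list means the sets look consistent.
    Comparisons are exact string matches, so a missing trailing slash or an
    http/https mismatch counts as a different URL.
    """
    problems = []
    for url, info in pages.items():
        for code, alternate in info["hreflang"].items():
            target = pages.get(alternate)
            if target is None:
                problems.append(f"{url}: hreflang '{code}' points to {alternate}, which was not crawled")
                continue
            if target["canonical"] != alternate:
                problems.append(
                    f"{url}: hreflang '{code}' points to {alternate}, "
                    f"but that page declares {target['canonical']} as canonical"
                )
            if url not in target["hreflang"].values():
                problems.append(f"{url}: {alternate} has no return link")
    return problems
```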

Question: If a brand builds communities, is there a way to submit these new roads other than waiting for Google Earth to map them and then for Google Maps to inherit those new locations?

Answer: I don’t know how that’s handled in Google Maps, I’d check in with their help forum.

Question: What’s the piece of SEO advice you hear often that makes you cringe because it’s so wrong?

Answer: Most wrong SEO advice isn’t actively harmful to a site, it doesn’t cause problems for a site in the long run, it’s more that people are sent off to do something that has little or no effect at all (eg, 301/302, subdomains/subdirectories, valid HTML, code:text ratio, keyword frequency, etc.). My problem with these is that it sends people off to do something useless rather than helping them to find real, long-term positive things they could do for their site. It’s like telling a business to change the type of paper they use to print their invoices — it’s sometimes a lot of work, nothing really changes, you could have spent that time doing something more useful instead. On top of that, the time spent arguing about these items is even more lost time. If you care strongly about one of those, include them in your next redesign; your time is precious, don’t waste it with bikeshedding.

Question: I know that we all should make our sites as technically sound as possible, which means addressing every issue we can, but are there 5 or fewer upper-tier issues that should come first and foremost before addressing other things?

Answer: Luckily, most modern CMSs make great websites right out of the box, so a lot of the old-school issues with smaller sites have fallen away. The main issue I see on small business sites is that they don’t tell you what they want to be found for, they pay for a graphic designer, and forget that you have to speak in the language of the user, not just make something that looks great. No need to keyword-stuff, but mentioning what it is you want to be found for ONCE on a site can make a big difference :).

The second kind of technical issue I get called for by the crawling & indexing team (they regularly send issues with sites our way, we contact them through Search Console messaging) is sites just plain blocking Googlebot, or some of our Googlebot IP addresses. It’s kinda basic, but if your hoster / sys-admin is blocking us, well, you’re going to have a hard time getting crawled, indexed, or ranking. Happens to small sites & big ones :-).

Apart from that, a somewhat niche technical issue I’ve seen recently is that some kinds of scripts (including some from Google) inject non-head HTML tags into the top of the head on a page. For us, this implicitly closes the head, and brings all of the meta-tags into the body of the page. For hreflang, that often results in those annotations being dropped completely. If you use hreflang, I’d double-check the rendered source with the Rich Results testing tool (it’s the only one that currently shows the rendered source), and check that there are no iframes or divs above the hreflang annotations.
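A rough way to spot this problem is to scan the rendered HTML for any non-head element that appears before the hreflang links. The checker below uses Python's `html.parser`. It approximates the HTML5 rule that an element such as `div`, `iframe`, or `img` inside `<head>` implicitly ends the head. It is a simplification, not a full HTML5 tree builder: for example, it ignores stray text, which also ends the head. Feed it the rendered source, as the answer recommends, not the raw server response.

```python
from html.parser import HTMLParser

# Elements allowed inside <head>. Anything else (div, iframe, img, p, ...)
# makes an HTML5 parser close the head implicitly and start the body.
HEAD_ELEMENTS = {"base", "link", "meta", "noscript", "script", "style", "template", "title"}


class HreflangHeadChecker(HTMLParser):
    def __init__(self):
        super().__init__()
        self.in_head = False
        self.head_closed_by = None  # tag that implicitly closed the head, if any
        self.kept = []              # hreflang values that stay inside <head>
        self.dropped = []           # hreflang values that end up in the body

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
            return
        if tag == "body":
            self.in_head = False
            return
        if self.in_head and tag not in HEAD_ELEMENTS:
            self.in_head = False
            self.head_closed_by = tag
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower()
        if tag == "link" and rel == "alternate" and "hreflang" in attributes:
            (self.kept if self.in_head else self.dropped).append(attributes["hreflang"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


checker = HreflangHeadChecker()
checker.feed('<html><head><iframe src="/x"></iframe>'
             '<link rel="alternate" hreflang="de" href="/de/"></head><body></body></html>')
print(checker.head_closed_by, checker.dropped)
```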

Question: I work with a large publishing website (more than 500,000 pages of unique content). In an effort to optimize for voice search, we are creating thousands of pages to answer single question/answer pairs with question/answer schema.org markup. While these pages are starting to generate lots of traffic, we are concerned that it could hurt our traffic elsewhere.

Are pages with single question/answer pairs considered low quality by Google?

Answer: If you want to go down this route, I’d try to weave Q&A content into your normal content instead of making individual pages for each Q&A. That way, regardless of how the algorithms evolve, the pages will continue to be useful & have enough to stand on their own.

FWIW I don’t know if it’s really worthwhile to optimize a site for only voice search just yet, that seems to be a really big bet that might not pay off in the end. Instead of doing that, if you have a lot of Q&A content, I’d look into voice-actions and ways of creating a bot based on your content. That’s still a very early-stage bet too, but you have a bit more control over how everything fits together, and can often make it work across the different voice platforms.

Question: Sadly, we have a User-Agent-dependent Vary header implementation of the mobile version because some of the content can’t run on mobile (Flash content, yep… sad).

For user experience reasons, our mobile content is slightly different from the desktop.

Answer: What will happen is we’ll index the mobile version instead of the desktop one. So if your content is different, that’s the version we’ll use. If you’re just tweaking things on mobile, that probably doesn’t matter. If significant parts are missing, well, those will be missing in the index too … (and probably frustrate your users too, it’s not just Googlebot wanting a mobile version)
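Dynamic serving of the kind described in the question comes down to two steps. The server chooses the HTML based on the User-Agent, and it sends `Vary: User-Agent` so that caches keep the variants apart. The sketch below uses a deliberately crude detection list and placeholder HTML, both assumptions. The practical point from the answer is that whatever the mobile branch returns is what gets indexed, so important content needs to be present there too. Googlebot Smartphone's user agent includes "Android" and "Mobile", so it lands in the mobile branch.

```python
# Crude, illustrative mobile detection; real sites usually use a maintained library.
MOBILE_HINTS = ("Mobile", "Android", "iPhone")

# Placeholder pages: keep the main content the same in both; only the layout differs.
DESKTOP_HTML = b"<!doctype html><title>Product</title><main>Full description</main>"
MOBILE_HTML = b"<!doctype html><title>Product</title><main>Full description</main><!-- mobile layout -->"


def dynamic_serving_app(environ, start_response):
    user_agent = environ.get("HTTP_USER_AGENT", "")
    is_mobile = any(hint in user_agent for hint in MOBILE_HINTS)
    body = MOBILE_HTML if is_mobile else DESKTOP_HTML
    start_response("200 OK", [
        ("Content-Type", "text/html; charset=utf-8"),
        # The response depends on the User-Agent, so say so to caches and crawlers.
        ("Vary", "User-Agent"),
        ("Content-Length", str(len(body))),
    ])
    return [body]
```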

Question: I have articles on my site that cover various aspects of a topic. I would like to create questions that the article can answer and post them as separate pages on the site. Is that acceptable or will I run the risk of duplicate content penalties?

Answer: I’d try to combine this into the article pages themselves. Making separate pages for each Q&A seems like you’d be generating a lot of lower-quality pages rather than improving the quality of your site overall.

Question: Lighthouse is already a powerful SEO tool. What other rich features is Google planning to add there in the near future?

Answer: Lighthouse is open-source, you can follow along what they’re working on and give your input directly there too! https://github.com/GoogleChrome/lighthouse

Question: Is there a big difference in the amount/variety of signals of “trust” that you need to accumulate for specific industries like Real Estate or Loan Pre-Approval in order to gain significant rankings compared to other industries?

Answer: I don’t think we have explicit thresholds or anything for individual niches, that wouldn’t really scale to the whole web. However, sometimes the competition is really, really strong, and if you want to compete in those queries, you’re going to have to do a lot of work. It’s no different than anywhere else in business 🙂

Question: Until the end of 2017, our company focused heavily on “texts must be key to solve all SEO problems. We will optimize the technical part of your website, but content is king. Put at least 500+ words on the home page and about 700+ words on the subpages.” In my opinion, that’s very one-sided.

Answer: FWIW I’m almost certain that none of our algorithms count the words on a page — there’s certainly no “min 300 words” algorithm or anything like that. Don’t focus on word count, instead, focus on getting your message across to the user in their language. What would they be searching for, and how does your page/site provide a solution to that? Speak their language — not just “German” or “English”, but rather in the way they understand, and in the way they’d want to be spoken with. Sometimes that takes more text to bring across, sometimes less.

Question: One of our customers reached out to us because he was curious about what the previous agency “did” to his site. More than 400 landing pages (like

Answer: The city/service pages sound like doorway pages, which would be against our guidelines, so finding a way to clean that up would probably be worthwhile.

Question: My question is on re-using old URLs to rank for new things. I’m a single product company that’s now a multi-product company. I have a product URL that ranks for a core term to my business, and I transferred it to a different page via a redirect. Can I now use the product URL to list all of my products without seeing a drop in rankings on my core term?

Answer: Usually you can’t just arbitrarily expand a site and expect the old pages to rank the same, and the new pages to rank as well. All else being the same, if you add pages, you spread the value across the whole set, and all of them get a little bit less. Of course, if you’re building up a business, then more of your pages can attract more attention, and you can sell more things, so usually “all else” doesn’t remain the same. At any rate, I’d build this more around your business needs — if you think you need pages to sell your products/services better, make them.

Question: For our clients, we try to go beyond best practices and A/B test at least the meta descriptions to find the most compelling ones. Is this something Google is OK with?

Answer: Go for it. I’ve seen people also test AdWords, where you can try titles/snippets and get numbers fairly quickly.
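When comparing two descriptions, it helps to check whether a difference in click-through rate is larger than chance would explain. Below is a minimal sketch of a standard two-proportion z-test. The click and impression counts would come from your own reporting; the ones shown are made up. One caveat applies to organic search. Running variant A and then variant B on the same page compares different time periods, and Google may show its own snippet instead of your description. That makes the result weaker evidence than an ad test in which the variants run side by side.

```python
from math import erfc, sqrt


def ctr_z_test(clicks_a, impressions_a, clicks_b, impressions_b):
    """Two-proportion z-test on CTR. Returns (z, two-sided p-value)."""
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    if se == 0:
        raise ValueError("no variation in the data (every or no impression clicked)")
    z = (ctr_b - ctr_a) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Made-up counts: 1.0% vs 1.5% CTR on 10,000 impressions each.
z, p = ctr_z_test(100, 10_000, 150, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```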

Question: I recently saw your reply on a post entitled When/How did you learn Technical SEO?, and a good analogy someone posted was, “This is like asking how did you learn to build a house”.

The top poster mentioned building your own websites, learning JS. If building your own websites and learning JS was not feasible, where would you start out in 2018 with the intention of not becoming an expert technical SEO, but to understand it enough to be able to work with technical SEOs?

Answer: Understanding how search engines crawl also goes a long way, that might be something to start on with regard to technical SEO. A good way to get a feel for that is to set up a site, and to crawl it with one of the crawlers out there (Screaming Frog, Xenu, etc.). Work out where the URLs are coming from, and how you can improve what’s found.
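A toy breadth-first crawler is enough to get a feel for what the answer describes. It follows same-host links from a start page and records, for every URL, which pages linked to it. That lets you trace where odd or duplicate URLs are coming from. This is a minimal sketch, not a substitute for a tool like Screaming Frog. It ignores robots.txt, canonical tags, redirects, and JavaScript-inserted links, so point it only at your own test site. `max_pages` is an arbitrary safety limit.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse
import urllib.request


class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def fetch_html(url):
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(start_url, fetch=fetch_html, max_pages=100):
    """Breadth-first crawl of one host. Returns {url: set of pages linking to it}."""
    host = urlparse(start_url).netloc
    found_on = {start_url: set()}
    seen = {start_url}
    queue = deque([start_url])
    fetched = 0
    while queue and fetched < max_pages:
        page = queue.popleft()
        try:
            html = fetch(page)
        except (OSError, ValueError) as err:  # network errors, HTTP errors, bad URLs
            print(f"failed: {page}: {err}")
            continue
        fetched += 1
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            url, _fragment = urldefrag(urljoin(page, href))
            if urlparse(url).netloc != host:  # skip other hosts, mailto:, javascript:
                continue
            found_on.setdefault(url, set()).add(page)
            if url not in seen:
                seen.add(url)
                queue.append(url)
    return found_on
```

Sorting the result by the number of linking pages, or looking for URLs that only one odd template links to, is a quick way to "work out where the URLs are coming from."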

Question: I ran a test in which I added structured data that referenced a translated word with multiple spellings as a canonical entity, using sameAs, to see whether Google might use it to return the same result for the various spellings. It didn’t.

Answer: I have no idea what that would do, I bet Gary was just curious to hear the test results instead of having to test it himself :-). I assume these kinds of things will improve over time, but at the same time, we have learned that blindly trusting markup on a page is usually A Bad Idea.

Question: The search quality rater guidelines state that customer support content is important for an ecommerce site to achieve a high quality rating. If this content is present but set to noindex, would that lower the overall perceived quality algorithmically?

Answer: The guidelines are meant for folks who are reviewing the search results tests, they don’t have a direct impact on rankings. Still, it’s useful to think a bit about the bigger picture, even if it isn’t reflected 1:1 in search.

Regarding noindex content — if it’s noindex, we don’t index it, so usually that just drops out and gets ignored.

Question: For schema, how deep should we go?

Answer: I don’t think there’s an absolute answer for this. I’d primarily focus on the SD that brings visible effects (that’s where you have a cleaner relationship between your work & the outcome), and for the rest, try to be reasonable. We do use SD to understand a page better, it helps us to rank it better where it’s relevant, but you’re not going to jump to #1 just because you have SD on a page.

Question: Recently, Google has very rarely indexed my content on test websites (when I use Fetch and Render). I don’t use black hat techniques; I just want to test some ideas 🙂 I was talking to two other SEOs, and they noticed the same: it’s now more difficult to get a test website indexed than it used to be. Did you change something in the algorithm?

Answer: Test websites are … kinda artificial, right? Our algorithms work best on normal websites :-). If you see an effect with a test site, I wouldn’t assume that it’s always transferable to a normal website. That makes it harder to test things (since you can’t really control all variables on a normal site), but IMO tuning our algorithms to work well for test sites doesn’t make much sense.

FWIW I have the same problems with my test sites when I try things out, you’re not alone! :-))