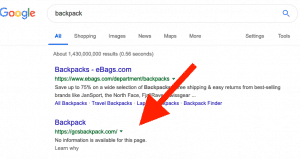

A website’s home page is ranking very well for a competitive keyword despite the fact that the website is blocking the search engine crawlers from indexing the site. A search for the keyword “backpack” in Google shows a website’s home page, GSCBackpack.com, ranking #2 for the keyword. However, Google isn’t allowed to crawl the website (especially the site’s home page), and Google is ranking the keyword anyway.

Several years ago, Google, in what I can only describe as “overzealousness”, made a decision to start indexing web pages despite the website owners telling the search engines that they did NOT want their website (or web page) in the search results. Google decided to take a more “technical” approach to the matter. They made the decision that if you tell the search engines not to crawl a website, Google will index the page anyway. In my opinion, if a website owner tells a web crawler to NOT crawl their website, then the page or website shoudln’t be in the search engine results. The page should be removed, in my opinion, and the page shouldn’t show up in the search results at all–even if it’s for a search query like the word “backpack”.

Previously, if you told the search engines to not crawl your website, that meant that your website or a web page wouldn’t show up in the search results, as it cannot be crawled. Now, even if you tell them to stay out, not to crawl, your web page can still show up in the search results, like this case here, for the keyword “backpack”. Google took it upon themselves to change the rules.

Now, if you do NOT want a web page to appear in the Google search results, you actually must ALLOW the search engines to crawl your web page. I know, that doesn’t make sense. But you must allow them to crawl the web page so that they can see the “noindex” meta tag that you’ll put on your page.

If the search engines, mainly Google, have actually indexed a web page that you don’t want them to index, then you can remove the page from Google.

In the case of the keyword “backpack”, and this page still showing up in Google’s search engine results, the site owner is blocking the search engines from crawling their home page. They’re most likely mistakenly blocking them, and, as a result, the website is still ranking well, but the search result listing shows that the search engines are not allowed to crawl it.

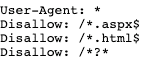

This is the website’s robots.txt file as I currently see it:

As you can see, the * on the directive is causing this. I assume this is a mistake by the site owner, and will most likely be fixed or updated. The robots.txt file (all sites should have one) typically appears at domain.com/robots.txt .