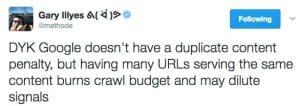

According to Gary Illyes, a Webmaster Trends Analyst at Google, the Google search engine has no duplicate content penalty. In a Tweet on February 13, 2017, Gary said the following:

“DYK Google doesn’t have a duplicate content penalty, but having many URLs serving the same content burns crawl budget and may dilute signals.” – Gary Illyes, Google

He also confirmed, in replies to his Tweet, that his statement only refers to internal pages on a website. So, for internal pages on your website, there is no duplicate content penalty. However, if you do have lots of duplicate content on your website, you’re “shooting yourself in the foot” if you don’t deal with that content properly.

Every website, according to Google, has a crawl budget. Essentially, Google will only crawl a certain number of pages on your website. As a result, if you have too much duplicate content on your website, Google’s crawler, Googlebot, may not be able to crawl all of the pages. And, if you burn through all of your crawl budget, Googlebot may not see the important pages. That’s just how I interpret this, but it seems logical to me, as that’s how they’re dealing with duplicate content.

In other words, Google is not penalizing your site for this. You’re penalizing yourself. So, Google’s “passing the buck”, so to speak, saying that it’s your fault, not theirs.

So how should you deal with duplicate content on your website? How do you make sure that you’re not using up all of your crawl budget? Here is what I recommend:

– First, check for duplicate content. Use Siteliner.com to check your site.

– Decide if that dupe content on your site is needed for visitors or if it can be deleted.

– If you can simply remove the dupe content, then go for it. That’s the best option. If the content is not there, then it’s not duplicate.

– See if you can remove some internal links to the duplicate content. The content might still be there, but if it’s not crawlable or accessible via links then that can help. For example, remove the tag pages on a blog. Keep the categories, but ditch the tag pages. Or the archives by date. Those are notorious for creating these issues on your site.

– Use the robots.txt file to stop the search engines from crawling certain pages that are creating duplicate content.

– Use the canonical tag, if necessary, to help with duplicate content. This can be helpful for ecommerce sites, especially if you have products that come in more than one color, size, or feature set.
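If you'd rather script a quick first pass yourself, here is a minimal Python sketch of two of the steps above: spotting pages with identical text, and confirming that a robots.txt rule really blocks the URLs you meant to block. Treat it as an illustration, not a replacement for a proper audit tool. The `PAGES` data, the example.com URLs, the `/tag/` rule, and the helper names `fingerprint`, `find_duplicates`, and `blocked_by_robots` are all made up for this example. It only catches exact duplicates after whitespace and case are normalized, so partial overlap between pages will slip through.

```python
import hashlib
import re
from collections import defaultdict
from urllib.robotparser import RobotFileParser

# Hypothetical sample data: URL -> visible page text (not from a real site).
PAGES = {
    "https://example.com/blog/post-1/": "Duplicate content  wastes crawl budget.",
    "https://example.com/tag/seo/": "duplicate content wastes crawl budget.",
    "https://example.com/about/": "About this site.",
}

# Hypothetical robots.txt that keeps crawlers out of the tag pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tag/
"""


def fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


def blocked_by_robots(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt rules disallow `url` for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)


if __name__ == "__main__":
    for group in find_duplicates(PAGES):
        print("Duplicate group:", group)
        for url in group:
            print("  blocked by robots.txt:", url, blocked_by_robots(ROBOTS_TXT, url))
```

With the sample data, the blog post and the tag page land in the same group, and only the tag page is reported as blocked, which is the outcome you want if the post is the version you intend to keep crawlable.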

While duplicate content isn’t officially something that Google penalizes, it’s one of the top 5 issues I see whenever I am doing a technical SEO audit of a website. It could quite possibly be in the top 3 issues, and in some cases it’s the most important issue to take care of.

And while Google says that it’s not a penalty per se, I can tell you that the results of having duplicate content issues on your site act, look, and feel like a penalty. So, if it looks like it, acts like it, and feels like it, it might as well be a penalty.